For many Indians, voice typing can feel unreliable. A sentence spoken clearly doesn’t always come back the same way on screen. Accents shift. Words are misunderstood. Regional expressions get flattened into something else.

A recent nationwide study has taken a closer look at why that might be happening.

The benchmark, called Voice of India, examined how major speech recognition systems perform across 15 Indian languages. It relied on recordings from more than 35,000 speakers. People spoke naturally — mixing languages mid-sentence, speaking over background sounds, using local expressions.

Benchmark Reveals Wide Accuracy Gaps Across Indian Languages and Dialects

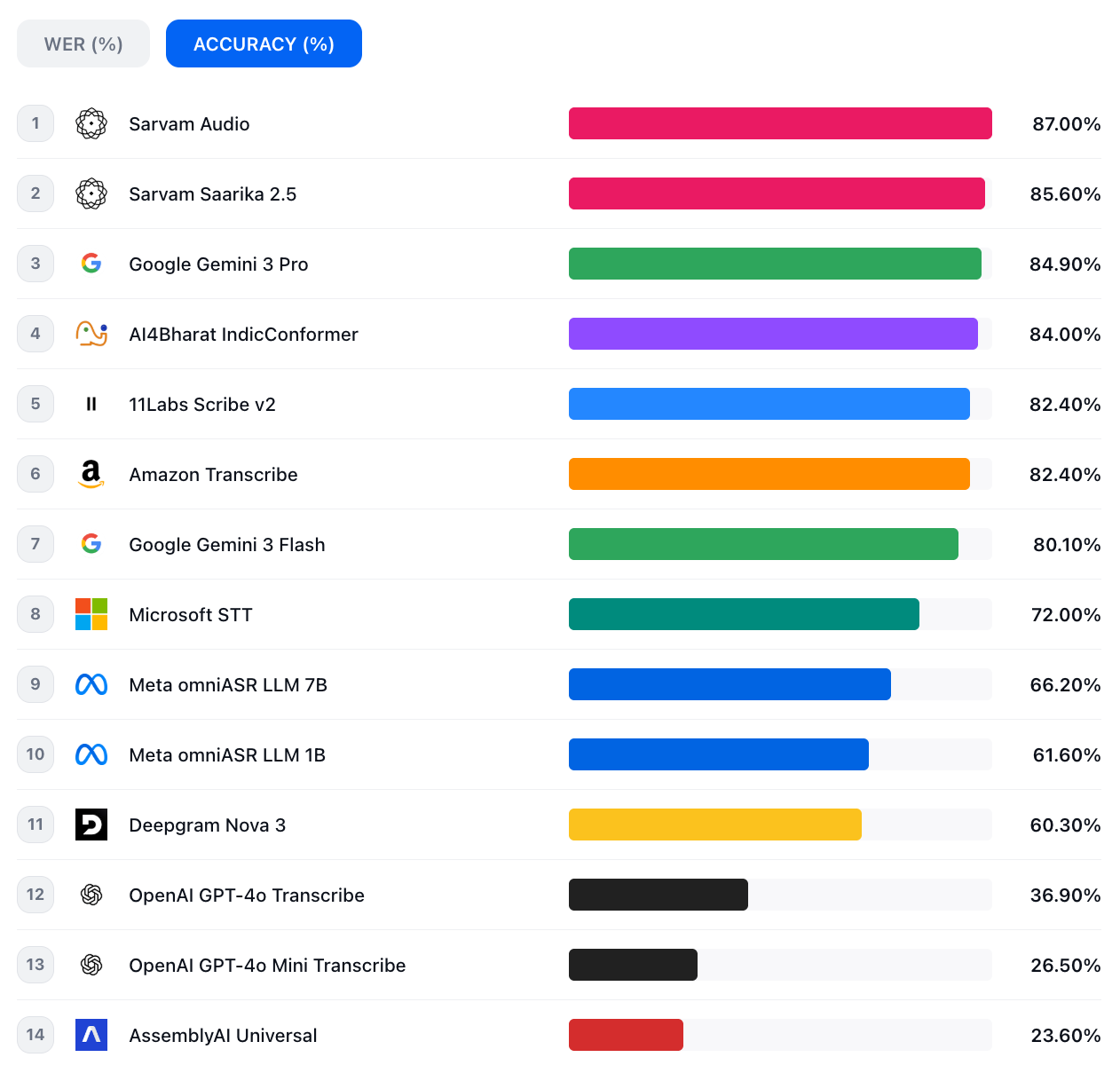

When those recordings were tested, the gap between systems became visible.

OpenAI’s transcription models showed error rates above 55 percent on some Indian speech samples. In Maithili and Tamil, close to two-thirds of words were transcribed incorrectly. In Urdu, the error rate reached 35.4 percent, while Sarvam Audio, evaluated under the same framework, recorded an error rate of 6.95 percent.
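The article reports these results as error rates but does not spell out the metric. Speech recognition benchmarks commonly use word error rate (WER). WER is the number of word substitutions, deletions and insertions needed to turn a system's transcript into the reference transcript, divided by the number of words in the reference. The Python sketch below shows how such a figure is computed. It assumes that Voice of India uses a WER-style measure, which the article does not confirm. The function `word_error_rate` and the sample sentences are hypothetical illustrations, not the benchmark's own code or data.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + deletions + insertions) / number of reference words.

    Hypothetical helper for illustration only: assumes plain whitespace
    tokenization and no text normalization, and requires a non-empty reference.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # dist[i][j] = minimum word edits to turn ref[:i] into hyp[:j]
    # (Levenshtein distance computed over words instead of characters).
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion: reference word was dropped
                dist[i][j - 1] + 1,         # insertion: extra word in the transcript
                dist[i - 1][j - 1] + cost,  # substitution (cost 1) or exact match (cost 0)
            )

    return dist[len(ref)][len(hyp)] / len(ref)


if __name__ == "__main__":
    # Hypothetical romanized Hindi-English (code-switched) sentence, not from the benchmark.
    reference = "kal meeting ko reschedule kar do please"
    hypothesis = "kal meeting ko schedule kar please"
    # One substitution (reschedule -> schedule) plus one deletion ("do") over 7 words.
    print(f"WER: {word_error_rate(reference, hypothesis):.2%}")  # prints "WER: 28.57%"
```

Under this definition, an error rate of 6.95 percent means roughly seven word errors per 100 reference words. Because insertions also count as errors, WER can exceed 100 percent. For example, `word_error_rate("a b", "x y z")` returns 1.5. Real evaluations usually normalize text (casing, punctuation, script) before scoring, and this sketch skips that step.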

Dialects presented an even tougher test. Bhojpuri, spoken widely across northern India, proved challenging for several global systems. In both Bhojpuri and Maithili, some models struggled to interpret a large share of spoken words accurately.

Meta’s systems showed higher error rates in Tamil and Malayalam than Indian-developed tools. Microsoft’s speech-to-text platform did not support six of the 15 languages assessed, including Punjabi, Odia and Kannada.

Across the evaluation, Indian-built systems delivered steadier results. Google’s Gemini models remained competitive in several languages, while OpenAI’s GPT-4o trailed Sarvam in overall average accuracy.

Sarvam ranked first or second in most of the languages tested, including Hindi, Bengali, Odia and Assamese, and exceeded 93 percent accuracy in certain regional dialects.

The study also looked at whether larger models improved performance. Meta’s 7B-parameter model showed only limited gains over its smaller 1B version across Indian languages.

What stands out is how the dataset was assembled. Around 2,000 speakers per language contributed recordings from different regions, age groups and social backgrounds. Code-switching — such as Hindi-English or Tamil-English — was part of the mix. Informal speech and environmental noise were not removed.

Researchers say the purpose was to reflect how people actually speak. Many global speech systems are trained primarily on English-dominant datasets, and when these systems are applied to India’s linguistic landscape, the performance gaps become more apparent.

The findings suggest that speech technology still has room to grow if it aims to serve India’s full diversity.
