Adobe has broadened its Firefly creative AI platform with a new range of tools and models covering audio, video, and imaging, marking another step in its effort to embed generative technology into professional creative workflows.

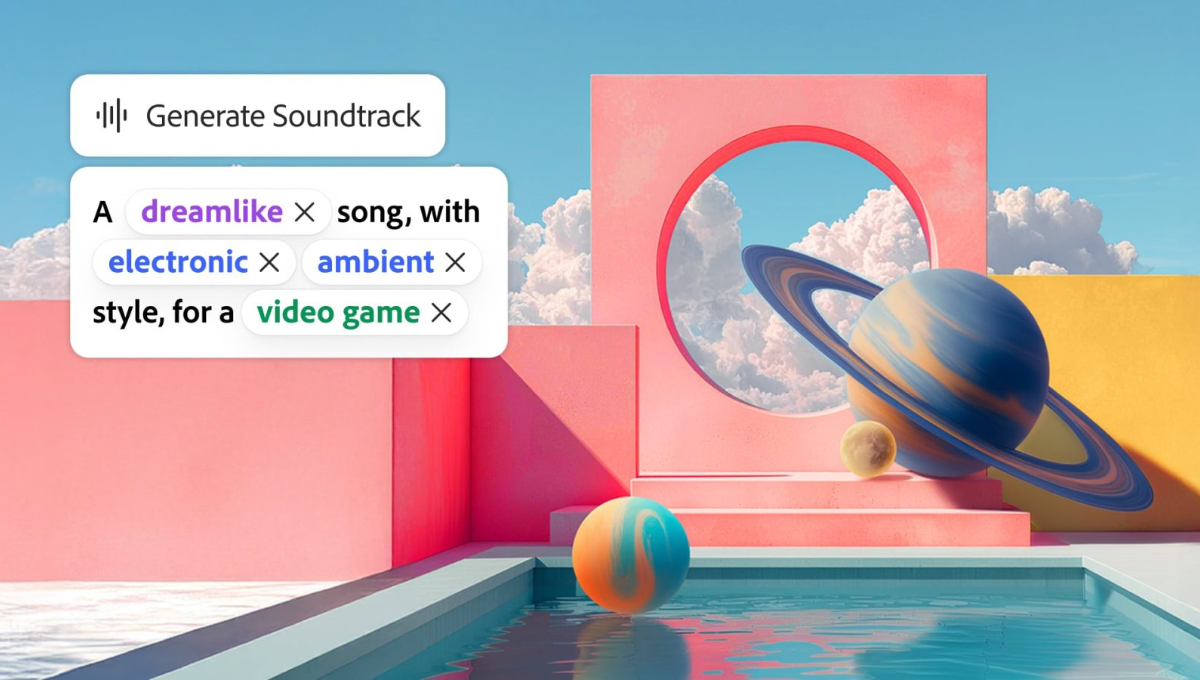

Revealed at the annual Adobe MAX conference, the latest features include Generate Soundtrack, which creates royalty-free background music; Generate Speech, for producing realistic multilingual voiceovers; and a timeline-based video editor that lets users craft and arrange clips with the help of AI.

With these updates, creators can now work entirely within Firefly, combining generated and uploaded content using both text commands and visual editing tools.

Adobe also introduced Firefly Image Model 5, capable of generating high-resolution 4MP photorealistic images and supporting natural-language-driven edits through a new “Prompt to Edit” option. The company said the model provides enhanced realism in lighting, texture, anatomy, and complex scene composition.

The Firefly ecosystem has also been expanded through integrations with partner technologies from ElevenLabs, Google, OpenAI, Topaz Labs, Luma AI, and Runway, creating one of the most comprehensive creative AI environments in the market. In addition, Adobe is extending access to Firefly Custom Models, enabling users to train private models tailored to their unique artistic styles.

Another addition, the experimental Project Moonlight, is a conversational AI assistant that can analyse a creator’s social activity and projects to help develop and refine ideas into finished pieces.

Most of the new capabilities, including Firefly Image Model 5, Generate Soundtrack, and Generate Speech, are now in public beta, while the video editor, Custom Models, and Project Moonlight remain in private beta for select users.
