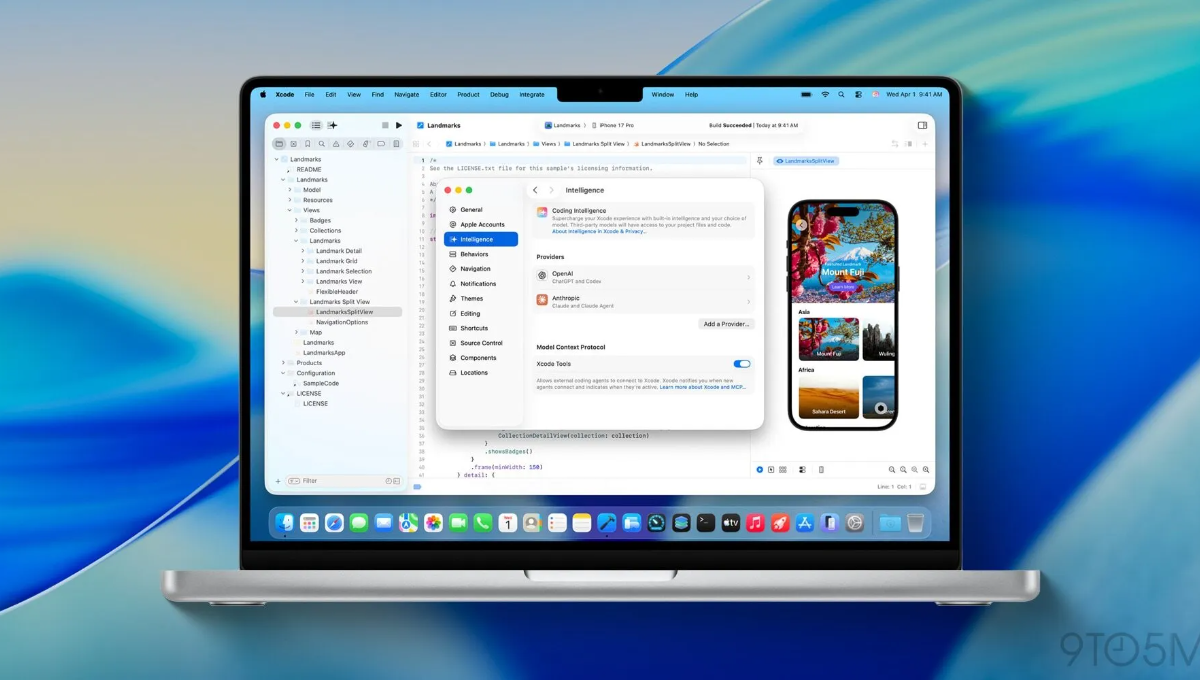

Apple has introduced agentic coding in Xcode 26.3, a major upgrade to how developers build apps for Apple platforms. The update lets autonomous AI coding agents, such as Anthropic’s Claude Agent and OpenAI’s Codex, operate directly within Xcode and help developers handle complex development tasks more efficiently.

Xcode 26.3 is available as a release candidate starting February 4 for members of the Apple Developer Program, with a broader public rollout expected soon via the App Store.

Apple said these AI agents can work collaboratively across the full app development lifecycle, helping developers move faster from idea to deployment.

AI Agents Take Over Core Development Tasks in Xcode

According to the company, agentic tools can search technical documentation, navigate file structures, update project configurations, and validate their work by running builds and capturing Xcode Previews. Apple says this reduces repetitive manual work and frees developers to focus on higher-level problem solving.

“Agentic coding supercharges productivity and creativity, streamlining the development workflow so developers can focus on innovation,” said Susan Prescott, Apple’s Vice President of Worldwide Developer Relations.

The release builds on the intelligence features introduced in Xcode 26, which brought AI-powered assistance for writing and editing Swift code. With Xcode 26.3, developers can use the built-in integrations with Claude Agent and Codex, or connect other compatible AI tools through the Model Context Protocol (MCP). MCP is an open standard for connecting AI agents to external tools and data, and it allows third-party agents to integrate with Xcode.

Separately, Apple has further strengthened its artificial intelligence capabilities by acquiring Q.ai, an Israeli startup specializing in imaging and machine learning.

Q.ai’s technology enables devices to interpret whispered speech and improve audio quality in noisy environments. These features could enhance products such as AirPods, where Apple has already introduced AI-driven capabilities like live translation.

The startup has also developed systems that detect subtle facial muscle movements, a technology that could improve user interaction on Apple Vision Pro as Apple pushes further into AI-powered computing.
