OpenAI’s annualised revenue has moved past $20 billion in 2025, a steep increase from roughly $2 billion in 2023, as demand for its AI products continues to rise alongside a rapid expansion of computing infrastructure.

Chief financial officer Sarah Friar said the company does not view revenue growth as a standalone target. Instead, OpenAI links its financial performance to how much practical work users are completing with its systems, allowing real usage to shape monetisation.

The connection becomes visible when looking at infrastructure. Over the past two years, OpenAI has significantly expanded its computing capacity, increasing total compute to about 1.9 gigawatts in 2025 from around 0.2 gigawatts in 2023, a rise of roughly 9.5 times. Annualised revenue grew about tenfold over the same period, closely tracking the increase in capacity.
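To make the comparison concrete, the short Python sketch below works through the arithmetic using only the rounded figures quoted in this article. The variable and function names are illustrative assumptions, not anything published by OpenAI, and the results are only as precise as the approximate inputs.

```python
# Illustrative arithmetic based on the rounded figures quoted in the article.
# All names here are hypothetical; the inputs are approximations.

revenue_usd_bn = {2023: 2.0, 2025: 20.0}  # annualised revenue, $ billions
compute_gw = {2023: 0.2, 2025: 1.9}       # total compute, gigawatts


def growth_multiple(series, start, end):
    """How many times larger the end value is than the start value."""
    return series[end] / series[start]


def annual_growth_rate(series, start, end):
    """Compound annual growth rate between two years, as a fraction."""
    years = end - start
    return growth_multiple(series, start, end) ** (1 / years) - 1


def revenue_per_gw(year):
    """Annualised revenue in $ billions per gigawatt of compute."""
    return revenue_usd_bn[year] / compute_gw[year]


if __name__ == "__main__":
    rev_x = growth_multiple(revenue_usd_bn, 2023, 2025)
    gw_x = growth_multiple(compute_gw, 2023, 2025)
    print(f"Revenue multiple 2023 to 2025: {rev_x:.1f}x")
    print(f"Compute multiple 2023 to 2025: {gw_x:.1f}x")
    print(f"Revenue growth per year: {annual_growth_rate(revenue_usd_bn, 2023, 2025):.0%}")
    for year in (2023, 2025):
        print(f"{year}: about ${revenue_per_gw(year):.1f}bn of revenue per gigawatt")
```

On these rounded inputs, revenue rose about 10 times and compute about 9.5 times, which works out to roughly $10 billion of annualised revenue per gigawatt in 2023 and about $10.5 billion in 2025. The near-constant ratio is what "a similar pattern" amounts to here, though it does not by itself show that one caused the other.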

Friar acknowledged that earlier limits on compute likely slowed adoption. With more infrastructure now available, OpenAI has been able to support a larger user base and more demanding workloads across different sectors.

Compute Expansion Drives Revenue Growth

User engagement is also at record levels. Both daily and weekly activity continue to climb as ChatGPT becomes part of regular work rather than a tool used only for experimentation.

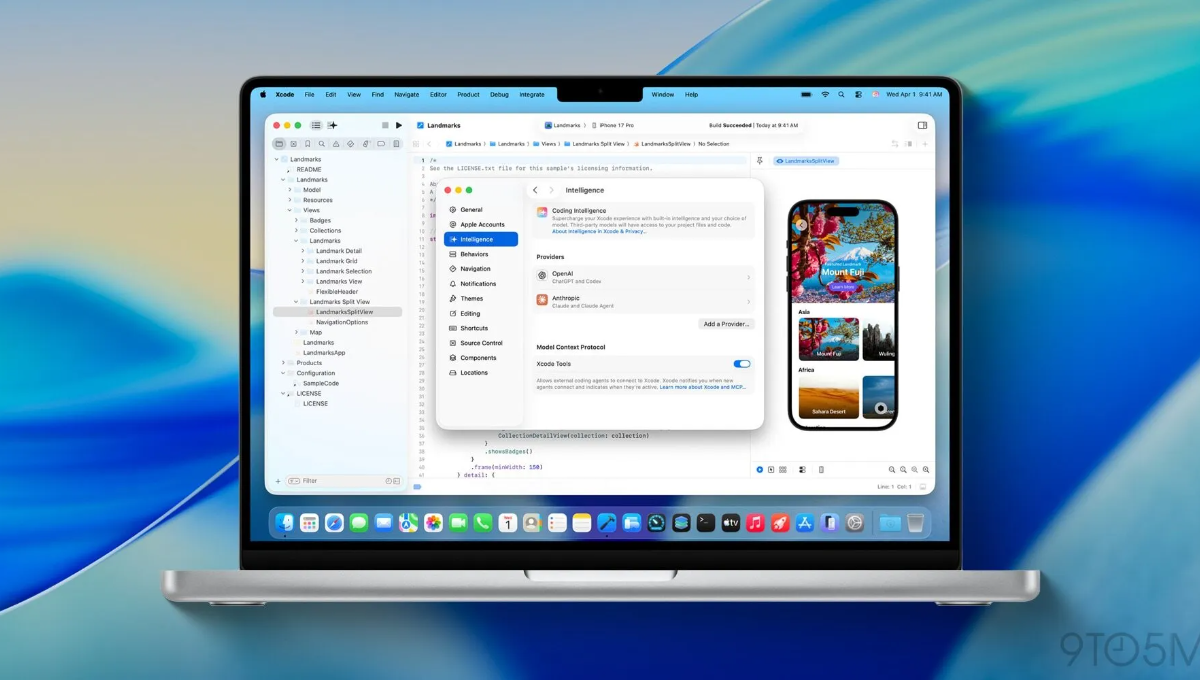

Originally released as a research preview, ChatGPT is now widely used in education, writing, software development, marketing, and finance. This shift has influenced OpenAI’s commercial strategy. The company began with individual subscriptions before rolling out plans for teams and large organisations. Developers were brought in through usage-based API pricing, where costs rise only when tools are actively used.

As AI tools became embedded in workplace routines, OpenAI adjusted its pricing structure to better reflect actual output rather than simple access.

More recently, the company has started introducing advertising and commerce-related features within ChatGPT. These additions are designed for users who want to move from research to action. Friar said such features are clearly marked and added selectively, with usefulness taking priority.

Infrastructure remains a central concern for the company. Friar described compute as one of the most constrained resources in AI. To reduce dependency risks, OpenAI has moved away from relying on a single provider and expanded its network of infrastructure partners.

In January, OpenAI signed a $10 billion agreement with chipmaker Cerebras Systems, signalling a stronger focus on inference capacity needed to support large-scale, real-time usage.

Looking to 2026, the company expects growth to come from practical deployments rather than experimentation. Areas such as healthcare, scientific research, and enterprise operations are expected to play a larger role. OpenAI is also exploring revenue models beyond subscriptions and APIs, including licensing agreements, intellectual property deals, and pricing tied to measurable outcomes.

As AI tools are applied more deeply in fields such as drug research, energy systems, and financial analysis, OpenAI expects its business model to continue evolving alongside real-world use.
